Since I've been playing with ISO images a lot lately (see posts

tagged: pxe), I thought I'd take a look at making it easier

to access their contents, since manually mounting and unmounting them gets

to be a drag. It turns out that an automounter is just

what the doctor ordered - a service that will mount a filesystem on demand.

Typically, you'd see automounters mentioned in conjunction with physical CD

drives, floppy drives, or NFS mounts - but the idea works just as well for

ISO files. This way you can have available both the original ISO image and

its contents - but without the contents taking up any additional space.

For FreeBSD, the amd utility will act as our automounter. On

Linux systems amd is an option too, but another system called autofs

seems to be more widely used there - perhaps I'll take a look at that in another

post.

Let's start with the desired end result ...

Directory Layout

On my home server I'd like to have this directory layout:

    /data/iso/
        images/
            openbsd-4.9-i386.iso
            ubuntu-10.04.3-server-amd64.iso
            ubuntu-11.04-server-amd64.iso
            .
            .
            .

/data/iso/contents is where the image contents will be accessible

on the fly, under directory names based on the ISO file names, for example:

    /data/iso/
        contents/
            openbsd-4.9-i386/
                4.9/
                TRANS.TBL
                etc/
            ubuntu-10.04.3-server-amd64/
                README.diskdefines
                cdromupgrade
                dists/
                doc/
                install/
                isolinux/
                md5sum.txt
                .
                .
                .
            ubuntu-11.04-server-amd64/
                .
                .
                .

Mount/Unmount scripts

amd on FreeBSD doesn't deal directly with ISO files, so we need a couple of

very small shell scripts that can mount and unmount the images. Let's call

the first one /local/iso_mount :

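#!/bin/sh

# $1 = ISO file, $2 = mountpoint; mdconfig prints the new device name (e.g. md0)

mount -t cd9660 "/dev/$(mdconfig -a -t vnode -f "$1")" "$2"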

It does two things: first it creates an md device backed by the given ISO

file (the first argument), and then it mounts that md device at the specified

mountpoint (the second argument). Example usage might be:

/local/iso_mount /data/iso/images/ubuntu-11.04-server-amd64.iso /mnt
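
If you want something a little more defensive, here is a minimal sketch of the same idea as a shell function. The argument checks, the exit codes, and the cleanup step are my own additions rather than anything amd requires, and I'm assuming the mountpoint directory already exists when the script is called:

```sh
#!/bin/sh
# Sketch only: iso_mount with argument checks and cleanup on failure.
# The exit codes (64, 66) are arbitrary choices borrowed from sysexits.
iso_mount() {
    if [ $# -ne 2 ]; then
        echo "usage: iso_mount <iso-file> <mountpoint>" >&2
        return 64
    fi
    iso=$1
    mountpoint=$2

    if [ ! -r "$iso" ]; then
        echo "iso_mount: cannot read $iso" >&2
        return 66
    fi
    if [ ! -d "$mountpoint" ]; then
        echo "iso_mount: $mountpoint is not a directory" >&2
        return 66
    fi

    # mdconfig prints the name of the device it created, e.g. "md0".
    unit=$(mdconfig -a -t vnode -f "$iso") || return 1

    # If the mount fails, destroy the md device so it doesn't leak.
    if ! mount -t cd9660 "/dev/$unit" "$mountpoint"; then
        mdconfig -d -u "${unit#md}"
        return 1
    fi
}
```

To use it as /local/iso_mount, add a final line calling iso_mount "$@".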

The second script we'll call /local/iso_unmount :

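#!/bin/sh

# $1 = ISO file; find the md device backing it, e.g. "md0"

unit=`mdconfig -lv | grep -F -- "$1" | cut -f 1`

# strip the "md" prefix to get the unit number

num=`echo "$unit" | cut -d d -f 2`

umount "/dev/$unit"

# give the system time to release the device before destroying it

sleep 10

mdconfig -d -u "$num"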

It takes the same parameters as iso_mount, although only the first is

actually used. (The sleep call is a bit hackish, but the umount command

seems a bit asynchronous, and it doesn't seem you can destroy the md device

immediately after umount returns - you have to give the system a bit of

time to finish with the device.) To undo our test mount above would be:

/local/iso_unmount /data/iso/images/ubuntu-11.04-server-amd64.iso /mnt
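
One way to avoid the fixed ten-second sleep is to retry the mdconfig -d call until it succeeds. The sketch below is an untested-on-every-release variation, not a drop-in replacement: the helper names md_unit_for and retry are made up for this post, and it assumes mdconfig -lv prints tab-separated lines with the unit name in the first field (which is what the cut -f 1 above relies on too).

```sh
#!/bin/sh
# Print the md unit (e.g. "md0") backing the ISO file given as $1.
# Reads `mdconfig -lv` style output on stdin and matches the path exactly.
md_unit_for() {
    awk -F '\t' -v file="$1" '
        { for (i = 2; i <= NF; i++) if ($i == file) { print $1; exit } }
    '
}

# Run a command up to $1 times, sleeping $2 seconds after each failure.
retry() {
    tries=$1
    delay=$2
    shift 2
    while [ "$tries" -gt 0 ]; do
        "$@" && return 0
        tries=$((tries - 1))
        [ "$tries" -gt 0 ] && sleep "$delay"
    done
    return 1
}

iso_unmount() {
    unit=$(mdconfig -lv | md_unit_for "$1")
    if [ -z "$unit" ]; then
        echo "iso_unmount: no md device found for $1" >&2
        return 1
    fi
    umount "/dev/$unit" || return 1
    # Try once a second, for up to ten attempts, to destroy the device.
    retry 10 1 mdconfig -d -u "${unit#md}"
}
```

The exact path match also avoids grep picking up a second image whose name merely contains the first one.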

amd Map File

amd is going to need a map file, so that when given the name of a

directory that something is attempting to access, it can look up where

to mount it from. For our needs, this can be a one-liner we'll

save as /etc/amd.iso-file :

* type:=program;fs:=${autodir}/${key};mount:="/local/iso_mount /local/iso_mount /data/iso/images/${key}.iso ${fs}";unmount:="/local/iso_unmount /local/iso_unmount /data/iso/images/${key}.iso ${fs}"

A map file is a series of lines of the form:

<key> <location>[,<location>,<location>,...]

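In our case we've got the wildcard key *, so it'll apply to anything we

try to access in /data/iso/contents/, and the location is a

semicolon-separated series of directives. type:=program indicates we're

specifying mount:= and unmount:= commands to handle this location. The

script path appears twice in each command because amd treats the first word

as the program to execute and the remaining words as its argument list,

starting with argv[0]. ${key} is expanded by amd to be the name of the

directory we tried to access, and ${fs} is the mountpoint defined by fs:=.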
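
To make the substitution concrete, here is a small illustrative helper that prints the command the map entry should boil down to for a given key. It is not part of amd, the function name is made up, and it assumes ${autodir} is /.amd_mnt (the FreeBSD default, as far as I can tell):

```sh
#!/bin/sh
# Hypothetical helper: show the mount command for a given key.
# AUTODIR stands in for amd's ${autodir}; /.amd_mnt is an assumption.
AUTODIR=${AUTODIR:-/.amd_mnt}

expand_mount_entry() {
    key=$1
    fs="$AUTODIR/$key"    # mirrors fs:=${autodir}/${key}
    echo "/local/iso_mount /data/iso/images/$key.iso $fs"
}
```

Running expand_mount_entry openbsd-4.9-i386 prints the iso_mount call with the image path and the mountpoint under /.amd_mnt filled in.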

amd Config File

I decided to use a config file to set things up rather than doing it all

with command-line flags, so this is my /etc/amd.conf file:

[ global ]

log_file = syslog

[ /data/iso/contents ]

map_name = /etc/amd.iso-file

This tells amd to watch the /data/iso/contents/ directory, and to

handle attempts to access it based on the map file /etc/amd.iso-file. It also

sends logging to syslog (typically you'd look in /var/log/messages).

Enable it and start

Add these lines to /etc/rc.conf :

amd_enable="YES"

amd_flags="-F /etc/amd.conf"

Fire it up with:

service amd start

You should be in business. Unfortunately, if you try

ls /data/iso/contents

the directory will initially appear empty, but if you try

ls /data/iso/contents/openbsd-4.9-i386

you should see a listing of the image's top-level contents (assuming you

have a /data/iso/images/openbsd-4.9-i386.iso file). Once

an image has been automounted, it will show up in ls /data/iso/contents.
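
Since the contents directory won't tell you what's available until something has been mounted, a tiny helper can list the valid names by looking at the images directory instead. This is just a convenience sketch, and the function name is made up:

```sh
#!/bin/sh
# List the directory names usable under /data/iso/contents,
# one per .iso file in the images directory (default: /data/iso/images).
list_iso_keys() {
    dir=${1:-/data/iso/images}
    for f in "$dir"/*.iso; do
        [ -e "$f" ] || continue    # the glob matched nothing
        basename "$f" .iso
    done
}
```

Each name it prints can be appended to /data/iso/contents/ to trigger the automount.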

Check the mount

If you try:

mount | grep amd

you'll probably see something like:

/dev/md0 on /.amd_mnt/openbsd-4.9-i386 (cd9660, local, read-only)

The cool thing is, after a couple minutes of inactivity, the mount will

go away, and /data/iso/contents will appear empty again.

Manually unmount

The amq utility lets you control the amd daemon. One possibility

is to request an unmount right away, for example:

amq -u /data/iso/contents/openbsd-4.9-i386
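
If several images are mounted and you want them all gone, you could feed the output of mount through a small filter and call amq -u for each one. A rough sketch, assuming the mounts live under /.amd_mnt as in the output above and that the image names contain no spaces (the function name is made up):

```sh
#!/bin/sh
# Read `mount` output on stdin and print the key (directory name) of
# every filesystem mounted under the given prefix (default: /.amd_mnt).
mounted_iso_keys() {
    awk -v prefix="${1:-/.amd_mnt}/" '
        $2 == "on" && index($3, prefix) == 1 {
            print substr($3, length(prefix) + 1)
        }
    '
}
```

Usage would be something like:

```sh
mount | mounted_iso_keys | while read -r key; do
    amq -u "/data/iso/contents/$key"
done
```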

Conclusion

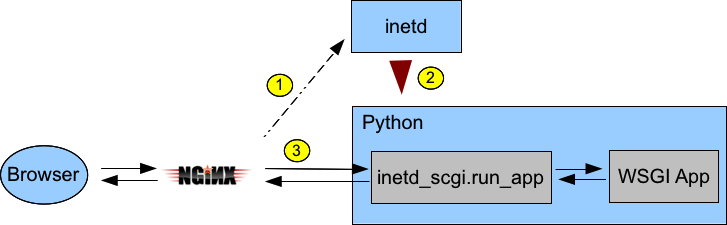

That's the basics. Now if you're setting up PXE booting and

point a web server such as Nginx at /data/iso, you'll be able

to reference files within the ISO images, and they'll be mounted as

needed.